welcome to learnaimind .AI is everywhere. It writes, recommends, diagnoses, approves, and denies. That is why AI Ethics cannot be a poster on a wall. It must guide daily choices. AI ethics guidelines are simple rules and practices that help teams build and use AI in a way that is fair, safe, private, and accountable. In 2025, new rules and higher public expectations raise the stakes. Trust is currency. Safety is not optional.

Global principles from the OECD, UNESCO, and the EU expert community point to a shared core. Fairness, transparency, accountability, privacy, safety, human oversight, and the common good. Get these right and you earn clear benefits: stronger user trust, fewer harms, better products, and faster compliance. Get them wrong and you risk ethics washing, where values live in slides but never touch code.

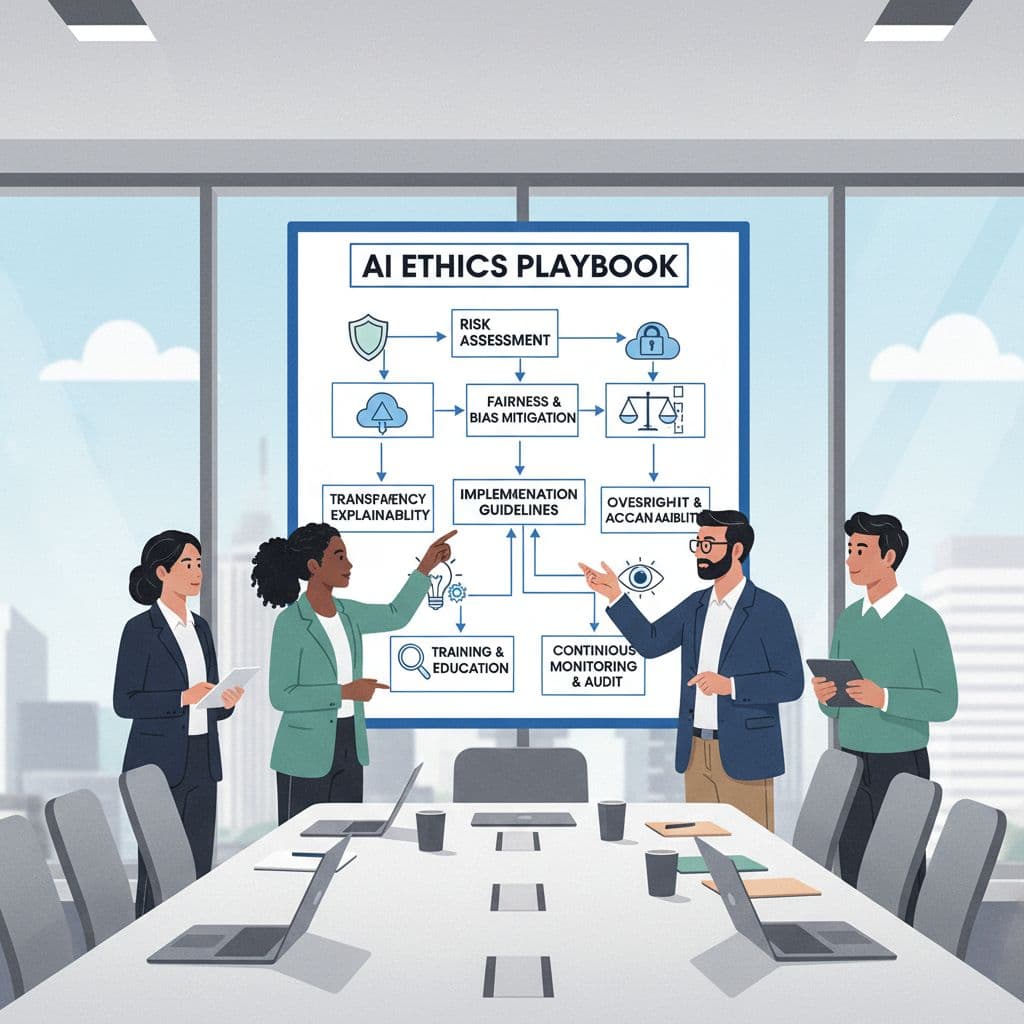

This guide shows what works now. First, the core principles in plain language. Second, a simple playbook your teams can use this week. Third, the key laws and standards to know. Finally, how to prove your program delivers results.

Why AI Ethics Guidelines Matter in 2025: trust, safety, and real results

Everyday risks are growing. Biased systems can harm users. Poor data handling can leak private facts. Generative models can produce unsafe content. Attackers can misuse tools. People who are hurt can struggle to get answers or a fix.

Clear AI ethics guidelines reduce these risks. They set shared rules for teams.They align with leading principles from OECD AI Principles and UNESCO’s Recommendation on AI Ethics. You do not need a law degree to act on them. You need clear owners, checks, and evidence.

Two quick examples show the point. A hiring tool with no bias checks can favor one group. A simple fix is to define a fairness goal, like similar selection rates across qualified groups. Test it before launch, after changes, and in production. A healthcare chatbot that gives confident but wrong medical advice can cause harm. Add confidence thresholds and hand off higher risk cases to a clinician.

The business case is strong. Clear rules reduce incidents, speed approvals, and build trust with users and regulators. The risk is ethics washing. Pretty words are not proof. This post shows how to measure progress and share evidence leaders can trust.

Core AI Ethics principles in plain language you can apply today

These principles match widely cited sources and should guide the full AI life cycle, from idea to retirement. They help teams think about people, not just models. Social and environmental well being should be considered across all steps, not as an afterthought or a separate box to tick.

Fairness and non-discrimination: reduce bias and test for equal outcomes

Fairness means people get fair treatment regardless of who they are. Biased outcomes happen when data is skewed, labels reflect past bias, or the model learns shortcuts.

Two steps help most teams:

- Choose clear fairness metrics for your use case. For hiring, a simple example is selection rate parity, where qualified groups have similar selection rates.

- Test across relevant groups before launch and after updates. Keep testing in production.

Invite domain experts and affected users into reviews. They can spot gaps your team misses. Call out tradeoffs. For example, a fraud model may trade a small accuracy gain for a large fairness loss. Write that down and decide with the right owners.

Transparency and explainability people can understand

Transparency means being upfront about data, purpose, and limits. Explainability means giving reasons a person can grasp.

Use three layers:

- User facing summaries, like what the system does, what it does not do, and where to get help.

- Decision reasons for affected people, like why a loan was denied and what could change the outcome.

- Technical docs for auditors, like model cards, data sources, limits, and test results.

Tools help. Model cards, decision logs, and audit trails keep records that teams can review and improve.

Accountability and clear responsibility from start to finish

Real people own outcomes. Map roles, and keep them visible:

- Product owner, responsible for user impact and approvals.

- Data steward, responsible for data sourcing and use.

- Model owner, responsible for performance and risk controls.

- Reviewer, responsible for independent checks.

Require sign offs at key gates. Keep an issue tracker for harms and fixes. Make it simple to report problems. Close the loop and share what you learned with teams who build next.

Privacy and data protection by design

Privacy starts with restraint. Collect only what you need for a clear purpose, keep it only as long as needed, and get valid consent when required.

Use these techniques:

- De identification and aggregation where possible.

- Access controls and key management for sensitive data.

- Privacy reviews for new features and data flows.

Honor user rights. Support access, correction, and deletion. A short training data checklist helps:

- Do we have a lawful basis or consent?

- Did we remove direct identifiers where we can?

- Are sensitive fields protected or excluded?

- Do prompts and logs collect any personal data, and if so, why?

Safety, reliability, and human oversight

Safety means preventing harm. Reliability means consistent performance in the intended context.

Put people in the loop for higher risk use cases. Set clear fallback plans if the model is unsure or fails. Stress test before launch. Run red team tests for safety and misuse. Include misuse scenarios like prompt injection, model spoofing, and data exfiltration. Log incidents and responses in one system so teams can learn and fix fast.

Turn principles into practice: a simple AI Ethics playbook

This action plan turns values into work. It sets steps, owners, and artifacts teams can use this week.

Set scope and rate risk before you build

Define the use case, users, and expected impact. Classify risk levels based on context and stakes, like low, medium, or high. Flag high risk areas early. Require extra review for high risk. Record decisions and owners in a one page brief that anyone can read.

Data governance that protects people and quality

Create a data map that lists sources, consent terms, and sensitive fields. Set rules for source approval and retention. Track dataset lineage across versions. Run quality checks for drift and gaps. Store dataset cards with owners and update dates. Make this a habit, not a one time task.

Bias testing and mitigation you can repeat

Pick fairness metrics that fit the task, not a one size rule. Test pre training, during training, and after deployment. Use representative test sets with enough data for each group. Apply mitigation methods like reweighting, data repair, or threshold tuning. Re test after each change. Report results in simple charts with plain labels.

Human oversight and fallback plans that work under pressure

Define when a person must review or approve outcomes. Set confidence thresholds for escalation. Give reviewers clear guidance and training. Add safe stop, appeal, and withdrawal options for users. Publish response times so people know what to expect.

Monitoring, incident response, and audits

Track key metrics in production. Bias drift, error rates, override rates, user complaints, and data subject requests. Define what counts as an incident and how to respond. Set up a hotline or inbox for reports. Schedule internal audits and fix deadlines. Share short postmortems with teams and add lessons to your playbook.

For a workflow many teams use, see the NIST AI Risk Management Framework, which groups work into govern, map, measure, and manage. Use it to shape your risk register, measurement plans, and evaluation reports.

Laws and standards to know in 2025, in plain English

This section is informational, not legal advice. The goal is to map each item to actions teams can take. Tie actions to the principles above and keep records you can show.

EU AI Act: what product and data teams should do

The EU AI Act sets risk categories and stronger duties for high risk systems. It also sets rules for general purpose models. High risk teams must keep documentation, improve data quality, add human oversight, log events, and offer clear notices.

Practical actions:

- Rate your system’s risk level and record why.

- Keep data quality and lineage records.

- Define human oversight and escalation rules.

- Log key decisions and model events.

- Publish brief transparency notices users can read.

For context and updates, see the EU’s page on the AI Act regulatory framework and the Parliament’s summary of the first regulation on AI.

NIST AI Risk Management Framework: use it as a workflow

Use NIST AI RMF as a simple workflow:

- Govern: define roles, policies, and risk appetite.

- Map: describe the system, its context, and impacts.

- Measure: select metrics, design tests, and gather evidence.

- Manage: fix, monitor, and improve over time.

Artifacts to keep:

- A risk register tied to use cases.

- A measurement plan with metrics and thresholds.

- Evaluation reports with test data and results.

You can start with the core guide here: NIST AI Risk Management Framework.

ISO/IEC 42001: build an AI management system

Think of ISO/IEC 42001 as a management system for AI, like ISO standards for quality or security. It sets requirements to establish, run, and improve an AI program.

What to document:

- Scope, roles, and objectives.

- Risk and impact assessments.

- Data governance procedures.

Start small. Pick one product line, build your AI management system there, then expand. The official page is here: ISO/IEC 42001:2023 – AI management systems.

OECD and UNESCO principles: align language and reports

Global principles stress human rights, fairness, transparency, accountability, privacy, safety, and social good. Map your policy terms to theirs. Reuse that mapping in reports and audits. It reduces confusion and speeds reviews.

See the OECD AI Principles overview and UNESCO’s Recommendation on the Ethics of Artificial Intelligence for common language you can adopt.

Avoid ethics washing: prove your AI Ethics program works

Ethics washing happens when a company publishes values but never changes how it builds. Evidence beats slogans. Leaders, auditors, and users want simple proof. Show what you measure, who is in charge, how you fix issues, and how users get help.

Pick clear KPIs and show real evidence

Pick a small set of KPIs and publish results:

- Bias metrics by group for top outcomes.

- Explanation coverage rate for decisions that affect people.

- Incident rate and median time to close.

- Data subject request response time.

- Audit pass rate and fix time.

Set targets. Report monthly. Show trends, not one time wins. Explain gaps and what you are doing to close them.

Put governance and roles in writing

Publish who approves what and when. Keep a RACI for ethics tasks. Require sign offs for high risk launches. Track training completion for anyone who touches models, data, or reviews. Store records where auditors can find them.

Use independent review and red teaming

Invite internal or external reviewers to test for bias, misuse, privacy leaks, and safety failures. Document findings, fixes, and retests. Share a short summary with leaders and, when you can, with users. This builds trust and improves models. For teams seeking structure to get started, Poynter’s updated starter kit on AI ethics guidelines can help with policy language.

Conclusion

AI can help, or it can harm. Strong AI Ethics guidelines turn values into daily practice.

Next week’s checklist: name owners, pick 3 to 5 metrics, map your policy to one trusted standard, and publish a user friendly summary of how your system works. Start small, measure, and improve. The goal is simple, build trustworthy AI that works for people and stands up to real tests.